In recent years, research has increasingly pointed to a wide-ranging problem in deep learning: a set of phenomena, summarised under the term ‘shortcut learning’, that can undermine the problem-solving capabilities of machine learning models. The use of AI-based processes in critical applications, for example in the financial or health sector, therefore poses significant risks that companies will need to address in greater depth in the future.

The phenomenon of shortcut learning

Shortcut learning can be illustrated vividly using image recognition of cows. Suppose we train a deep neural network, typically a convolutional neural network (CNN), on example images that show cows in their usual setting, on or in front of a green meadow. The network may then fail to learn the generic visual characteristics of cows and instead simply associate the colour green with the label ‘cow’. If image recognition software based on such a network is then shown a cow against a blue background (cf. the AI-generated illustrative image used here; source: Bing), it will most likely fail to recognise it as a cow. Conversely, a cat in front of green wallpaper might be misidentified as a cow.

The AI has, therefore, taken a shortcut. Instead of actually developing the ability to recognise the object, it has merely learned a spurious correlation present in the training data and treated it as if it were causal. This phenomenon, already known from comparative psychology and the neurosciences, poses a growing challenge for artificial intelligence. In general terms, shortcut learning is a failure mode that can arise under quite general, non-specific conditions: a machine learning model picks up on features that are easy to detect but irrelevant to the task, and then generalises incorrectly from them. Put simply, the model takes the path of least resistance and, in doing so, loses its ability to solve problems in real application scenarios.
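The effect can be reproduced in miniature. The following Python sketch is a toy illustration only and is not taken from any real image recognition system: it replaces the neural network with a simple logistic regression trained by gradient descent, and it replaces images with two hypothetical numeric features, `shape` (genuinely related to the label, but noisy) and `background` (clean and easy to exploit, but only coincidentally aligned with the label in the training data). All names, sample sizes and noise levels are assumptions chosen for illustration.

```python
import numpy as np


def make_data(n, background_agreement, rng):
    """Generate a toy 'cow vs. not-cow' dataset with two hypothetical features.

    shape:      genuinely related to the label, but noisy (hard to use).
    background: clean and easy to use, but matches the label only with
                probability `background_agreement`.
    """
    y = rng.integers(0, 2, size=n)           # 1 = cow, 0 = not a cow
    sign = 2 * y - 1                         # map labels to -1 / +1
    shape = sign + rng.normal(0.0, 2.0, size=n)
    agrees = rng.random(n) < background_agreement
    background = np.where(agrees, sign, -sign) + rng.normal(0.0, 0.1, size=n)
    return np.column_stack([shape, background]), y


def train_logreg(X, y, steps=2000, lr=0.1):
    """Fit a logistic regression with plain batch gradient descent."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))   # predicted P(cow)
        w -= lr * (X.T @ (p - y)) / len(y)
        b -= lr * np.mean(p - y)
    return w, b


def accuracy(X, y, w, b):
    """Share of samples classified correctly at a 0.5 probability threshold."""
    return float(np.mean(((X @ w + b) > 0) == (y == 1)))


def run_demo(seed=0):
    rng = np.random.default_rng(seed)
    # Training data: the background matches the label 98% of the time
    # (cows almost always stand on green meadows).
    X_train, y_train = make_data(5000, 0.98, rng)
    # Shifted data: the background is unrelated to the label
    # (cows on blue backgrounds, cats in front of green wallpaper).
    X_shift, y_shift = make_data(5000, 0.5, rng)
    w, b = train_logreg(X_train, y_train)
    return {
        "weights": w,  # [weight on shape, weight on background]
        "train_accuracy": accuracy(X_train, y_train, w, b),
        "shifted_accuracy": accuracy(X_shift, y_shift, w, b),
    }


if __name__ == "__main__":
    print(run_demo())
```

Under these assumptions, the model should place most of its weight on `background`, score well on data that resembles the training set, and fall to roughly chance level once the background no longer tracks the label. Evaluating on deliberately shifted data in this way is one simple means of exposing a shortcut before a product goes into operation.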

A problem with serious consequences for companies

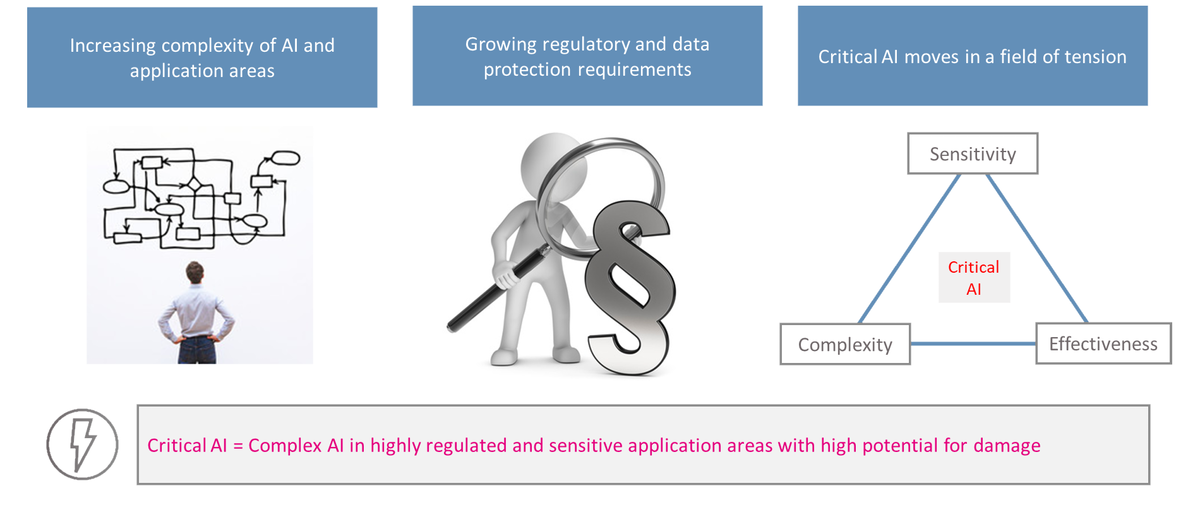

In the future, companies and providers of AI products will increasingly face the question of how to handle the risk that ML models fail because of shortcut learning. In the best case, unusable AI products are discarded during early testing and written off as a bad investment. Even this can cause substantial financial losses, since beyond direct development costs there are often costs arising from flawed strategic planning, particularly when pilot projects within an organisation-wide AI strategy are affected. In the worst case, an AI product compromised by shortcut learning or a similar issue is put into operation or brought to market. This could have devastating consequences, especially in critical applications with little tolerance for error, such as lending decisions, financial advice, or medical diagnostics.

Better risk management systems are needed

To develop and successfully implement long-term strategies for avoiding these issues, and to build suitable risk management systems, the ongoing scientific discussion needs to be broadened to include practical and business-related aspects. Careful consideration of findings on the technology- and model-related causes of shortcut learning will be essential. Just as important, however, will be designing and implementing measures that adapt the management and organisation of AI projects. One concrete first step would be to build a dedicated knowledge base that helps companies and organisations structure AI development projects with risk management and quality standards in mind, combining subject-specific domain knowledge with known technical best practices.